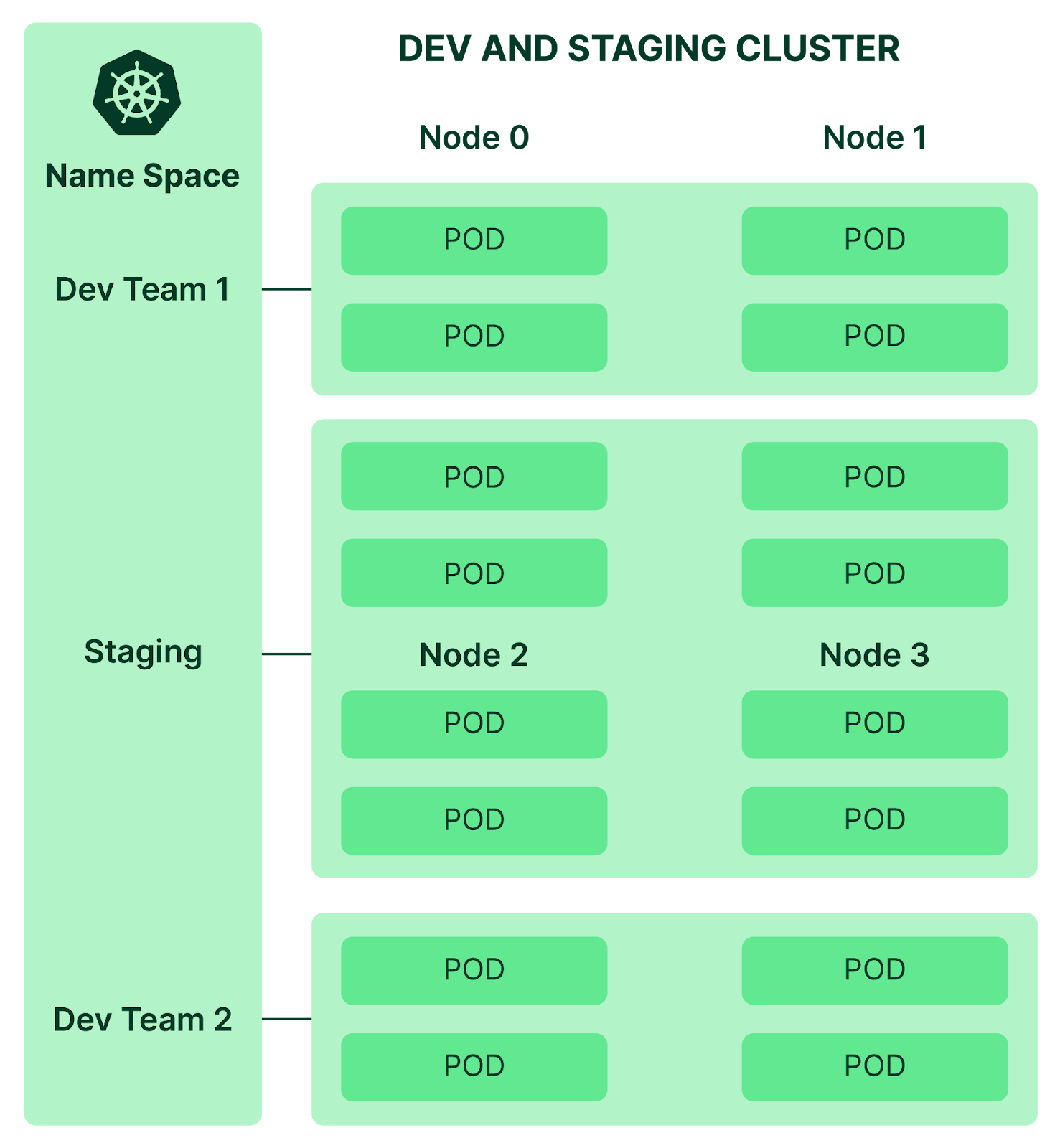

Multi-tenant and shared-resource visibility

In a multi-tenant environment, custom labels combined with Kubernetes label selectors let DevOps teams monitor the status of pods by environment. By attaching key-value pairs that identify the environment, such as "environment": "dev", "environment": "test", and "environment": "prod", teams can easily filter and list the status of pods. For example, the command below lists the status of all production pods in the current namespace (add --all-namespaces to search the whole cluster):

```shell
kubectl get pods -l 'environment=prod'
```

In a multi-tenant setup, it is also essential to understand which services a particular tenant uses. By filtering on a "tenant" label, such as "tenant": "account134", and refining further with tier labels like "tier": "backend" and "tier": "frontend", teams can collate data specific to that tenant's usage. A label selector accepts several comma-separated requirements that must all match, for example kubectl get pods -l 'tenant=account134,tier=backend'.
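To make the filtering logic concrete, here is a minimal sketch of how equality-based label selection narrows a pod list. It is not the Kubernetes implementation: the pod records, the `parse_selector` and `select` helpers, and the `PODS` data are all hypothetical, and only the `key=value` form is handled (real selectors also support `!=`, `in`, `notin`, and existence checks).

```python
from collections import Counter

# Hypothetical pod records; in a real cluster these would come from the
# Kubernetes API (for example, `kubectl get pods -o json`).
PODS = [
    {"name": "api-1", "phase": "Running",
     "labels": {"environment": "prod", "tenant": "account134", "tier": "backend"}},
    {"name": "api-2", "phase": "Pending",
     "labels": {"environment": "prod", "tenant": "account134", "tier": "backend"}},
    {"name": "web-1", "phase": "Running",
     "labels": {"environment": "prod", "tenant": "account134", "tier": "frontend"}},
    {"name": "web-2", "phase": "Running",
     "labels": {"environment": "dev", "tenant": "account200", "tier": "frontend"}},
]


def parse_selector(selector: str) -> dict[str, str]:
    """Parse an equality-based selector such as 'tenant=account134,tier=backend'.

    Only the key=value form is supported in this sketch.
    """
    requirements = {}
    for term in selector.split(","):
        key, _, value = term.strip().partition("=")
        requirements[key.strip()] = value.strip()
    return requirements


def select(pods: list[dict], selector: str) -> list[dict]:
    """Return pods whose labels satisfy every requirement (logical AND)."""
    reqs = parse_selector(selector)
    return [p for p in pods
            if all(p["labels"].get(k) == v for k, v in reqs.items())]


# Mirrors the label filtering in: kubectl get pods -l 'environment=prod'
prod = select(PODS, "environment=prod")
print(Counter(p["phase"] for p in prod))

# Narrow to one tenant, then count pod status per tier.
tenant_pods = select(PODS, "tenant=account134")
by_tier = Counter((p["labels"]["tier"], p["phase"]) for p in tenant_pods)
print(by_tier)
```

Grouping by the "tier" label after selecting on "tenant" is what lets a team see, per tenant, how many backend and frontend pods are running or stuck.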

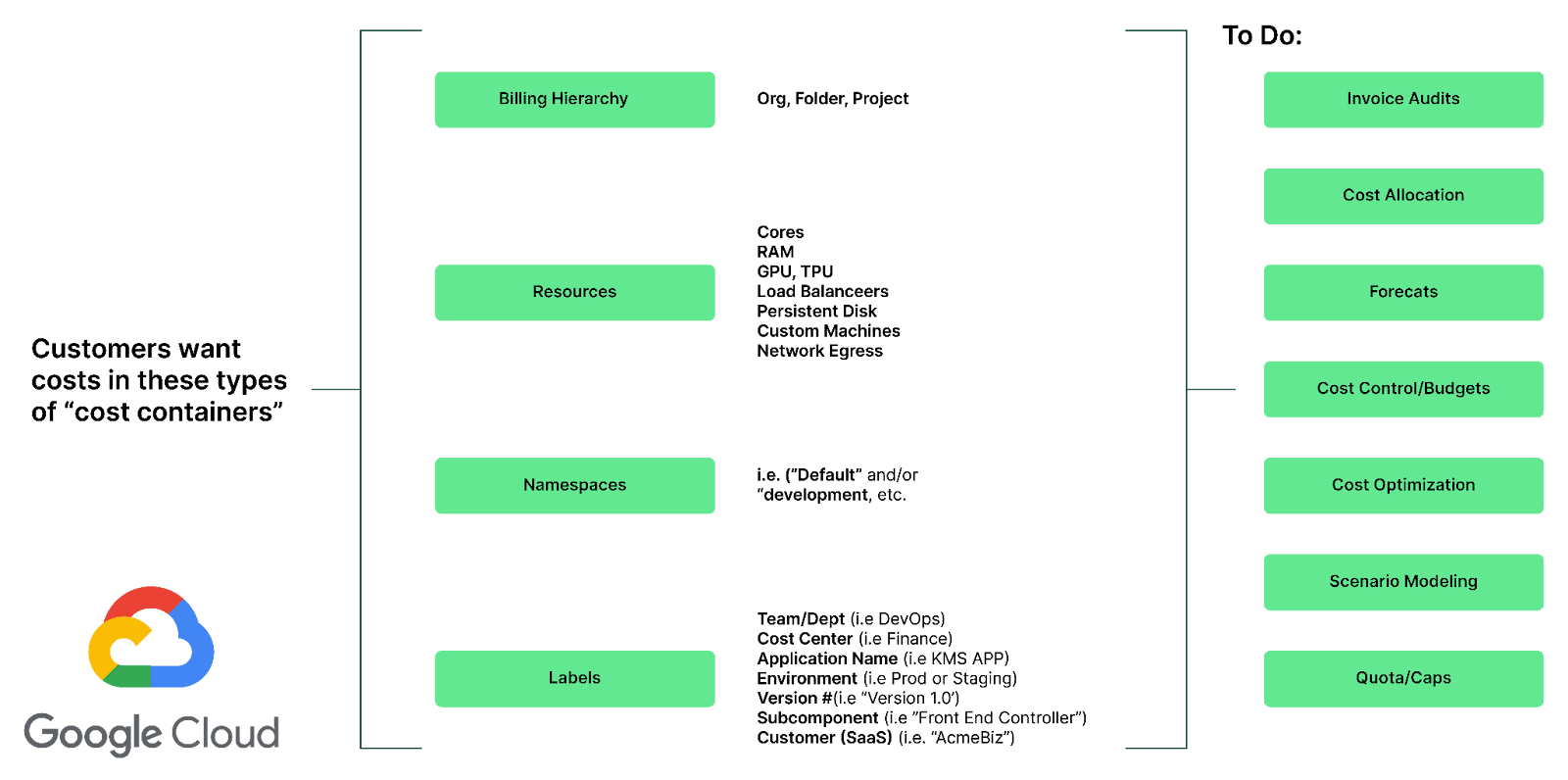

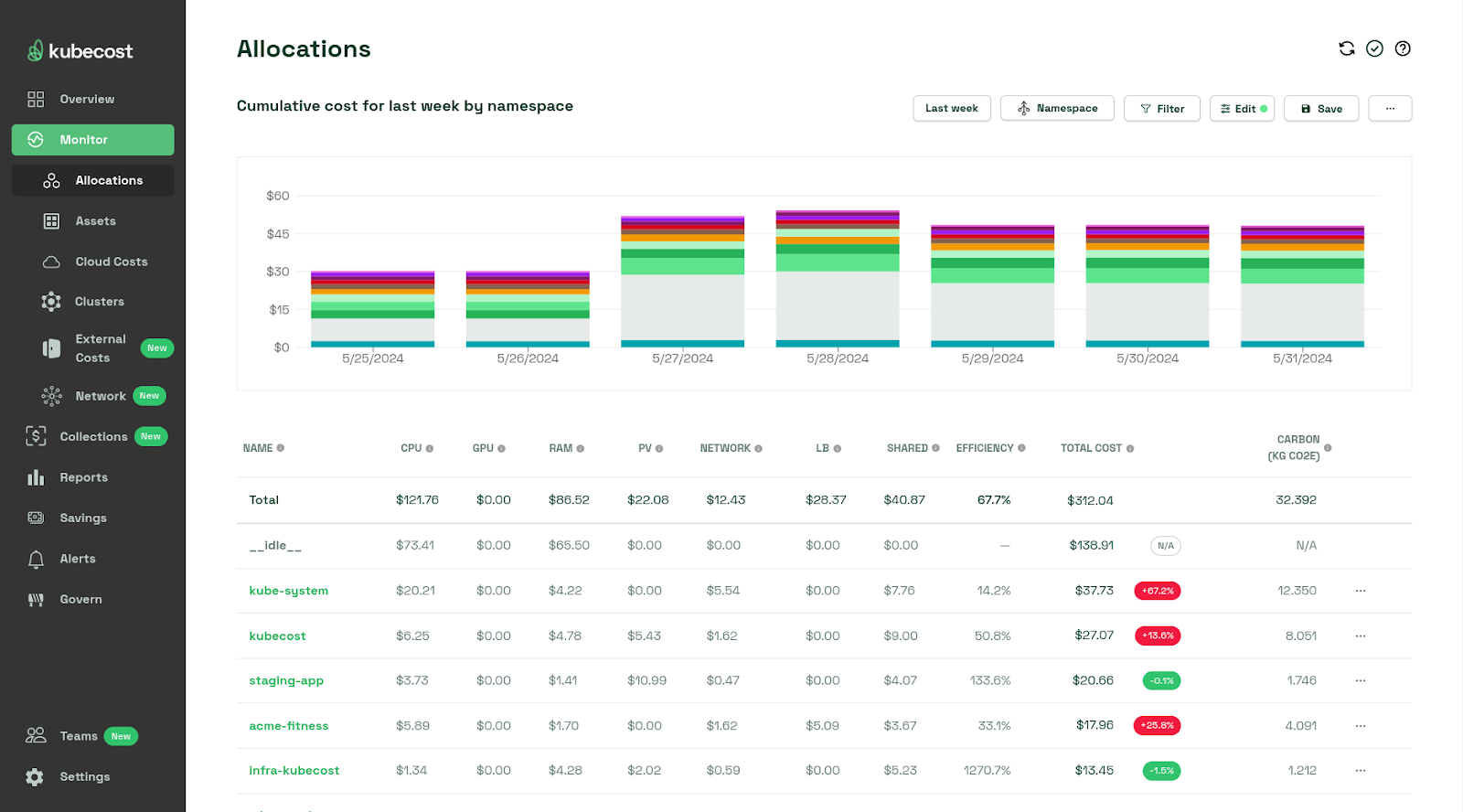

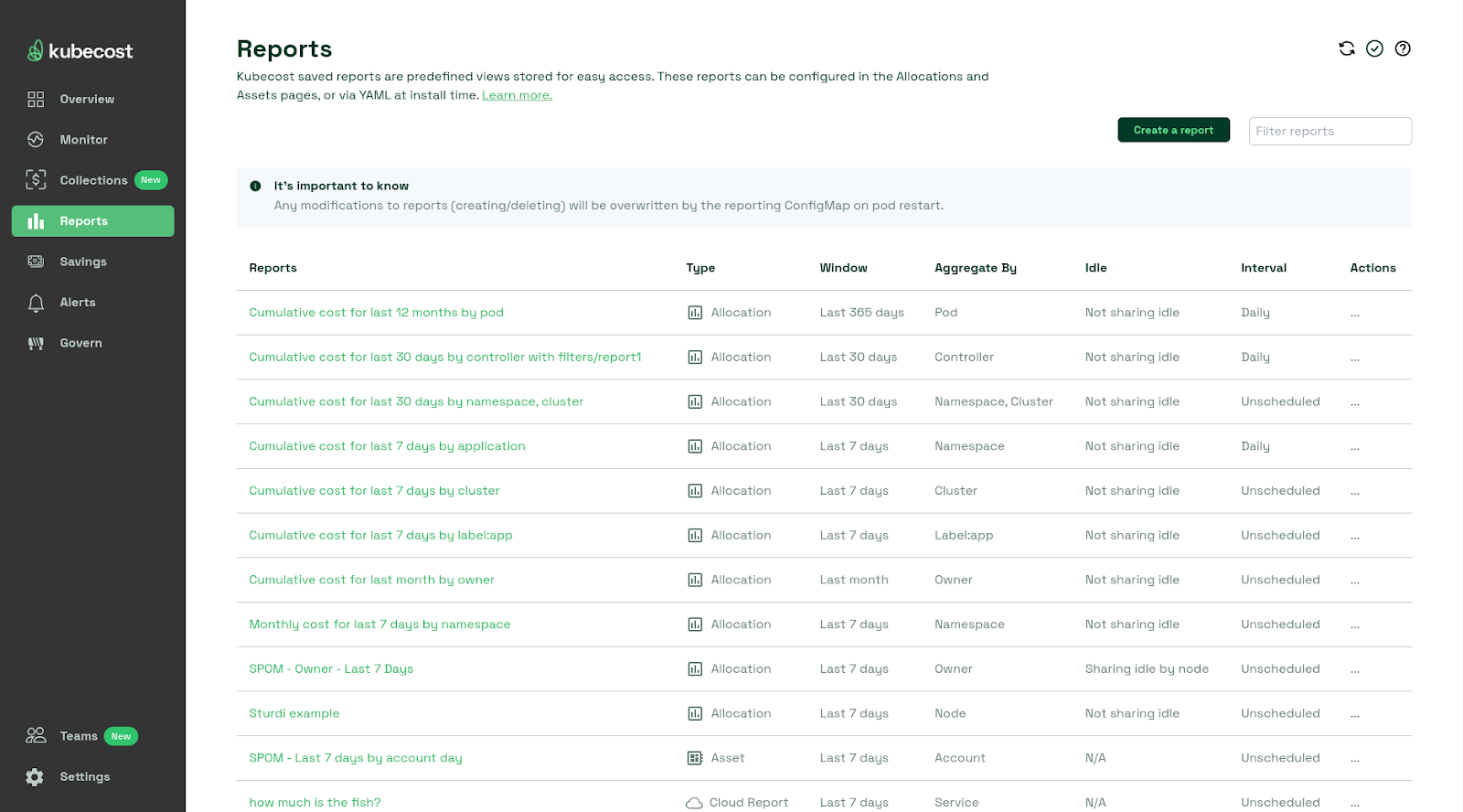

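While labeling simplifies cost insights, accurately allocating costs in shared, autoscaled environments remains challenging, because pods from many tenants share the same nodes and the number of pods changes as workloads scale.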

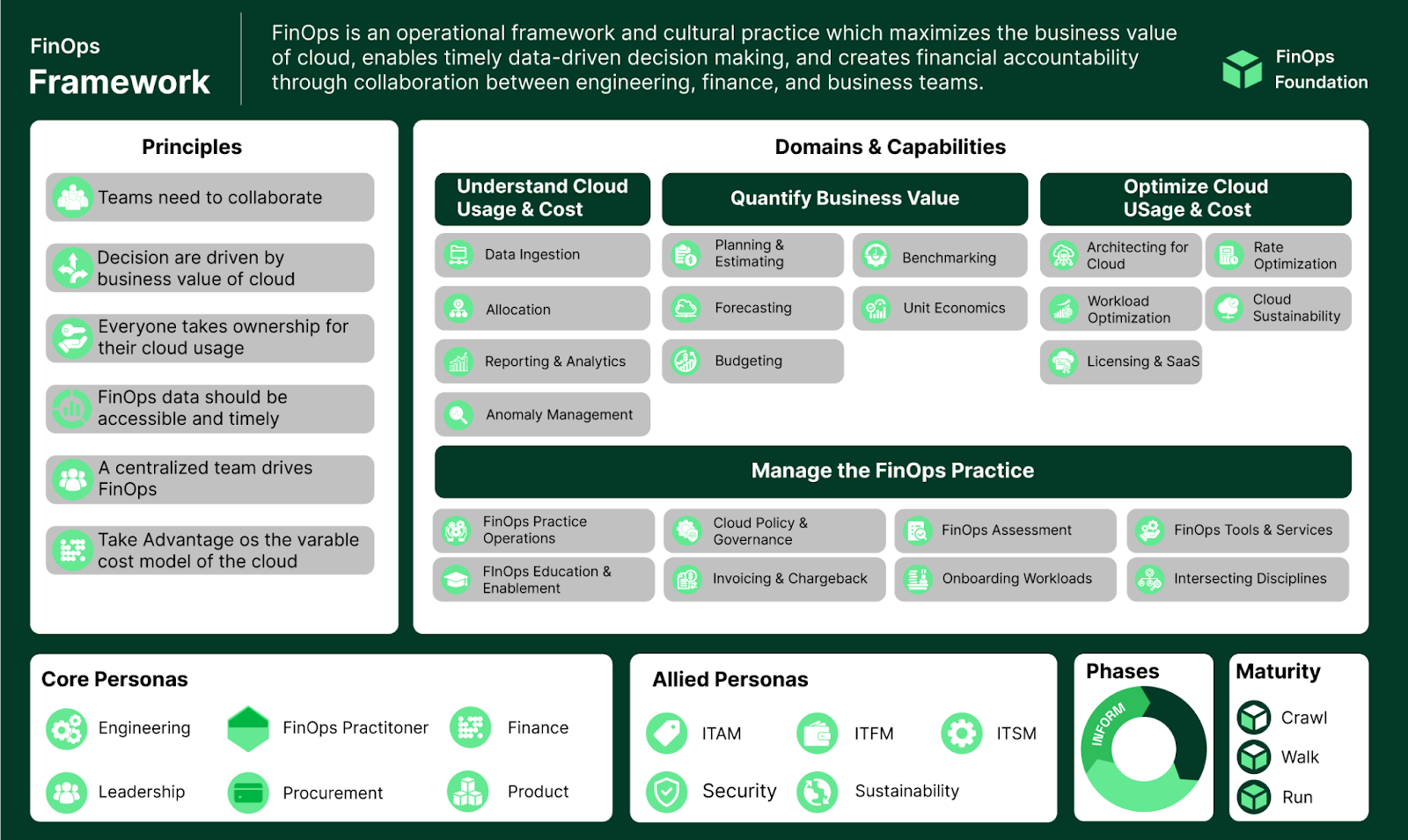

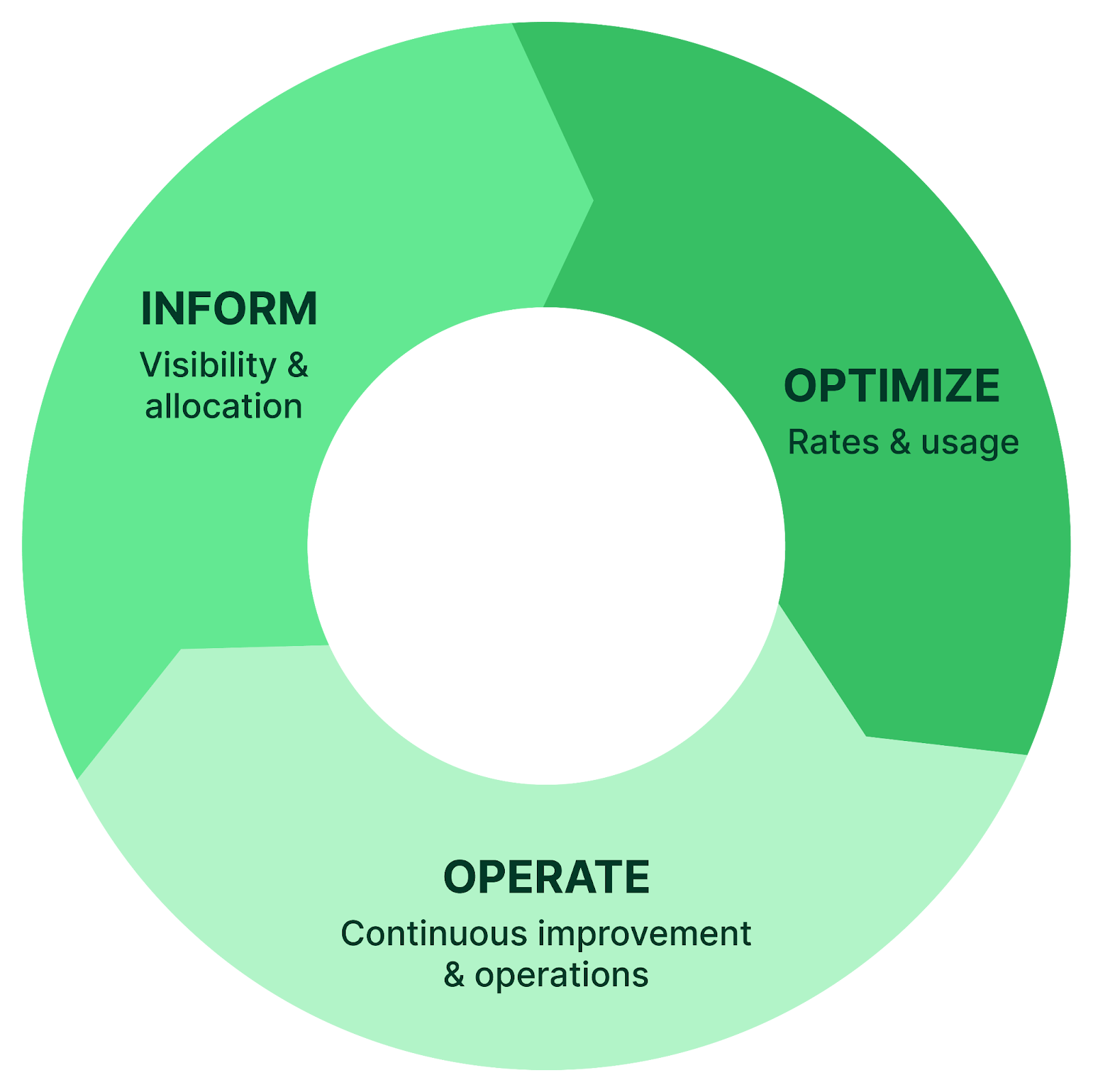

Cost allocation often involves assigning each tenant its proportional usage of resources, including CPU, GPU, memory, disk, and network. In this context, unit economics, which ties cost to the smallest measure of resource consumption that can be tracked and analyzed, gives FinOps teams a framework for concentrating on the costs associated with each tenant or project and for targeting their optimization efforts.
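Proportional allocation across several resource dimensions can be sketched in a few lines. This is one possible approach under stated assumptions, not a standard formula: the unit prices, tenant usage figures, and cluster bill below are hypothetical, each tenant's consumption is priced per resource, and the full bill (including idle capacity) is then split in proportion to those direct costs.

```python
# Hypothetical hourly unit prices (USD); in practice derive them from your
# provider's billing data for the node types in the cluster.
UNIT_PRICE = {"cpu_cores": 0.031, "memory_gib": 0.004, "gpu": 0.90}

# Hypothetical hourly consumption per tenant (for example, the larger of
# requested and actually used resources).
USAGE = {
    "account134": {"cpu_cores": 12, "memory_gib": 48, "gpu": 0},
    "account200": {"cpu_cores": 4, "memory_gib": 16, "gpu": 1},
    "account301": {"cpu_cores": 8, "memory_gib": 64, "gpu": 0},
}

# Hypothetical hourly bill for the shared node pool, including idle capacity.
CLUSTER_BILL_PER_HOUR = 3.00


def direct_cost(usage: dict[str, float]) -> float:
    """Price one tenant's consumption across all resource dimensions."""
    return sum(qty * UNIT_PRICE[resource] for resource, qty in usage.items())


def allocate(usage_by_tenant: dict[str, dict[str, float]], bill: float) -> dict[str, float]:
    """Split the whole bill in proportion to each tenant's direct cost.

    Idle capacity is spread proportionally here; charging it to a
    separate shared cost center is an equally valid policy.
    """
    direct = {tenant: direct_cost(u) for tenant, u in usage_by_tenant.items()}
    total = sum(direct.values())
    return {tenant: bill * cost / total for tenant, cost in direct.items()}


shares = allocate(USAGE, CLUSTER_BILL_PER_HOUR)
for tenant, cost in shares.items():
    print(f"{tenant}: ${cost:.3f}/hour")
```

Pricing each dimension separately keeps a GPU-heavy tenant such as the hypothetical "account200" from being undercharged simply because it requests few CPU cores.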

Mapping Kubernetes cost to business value

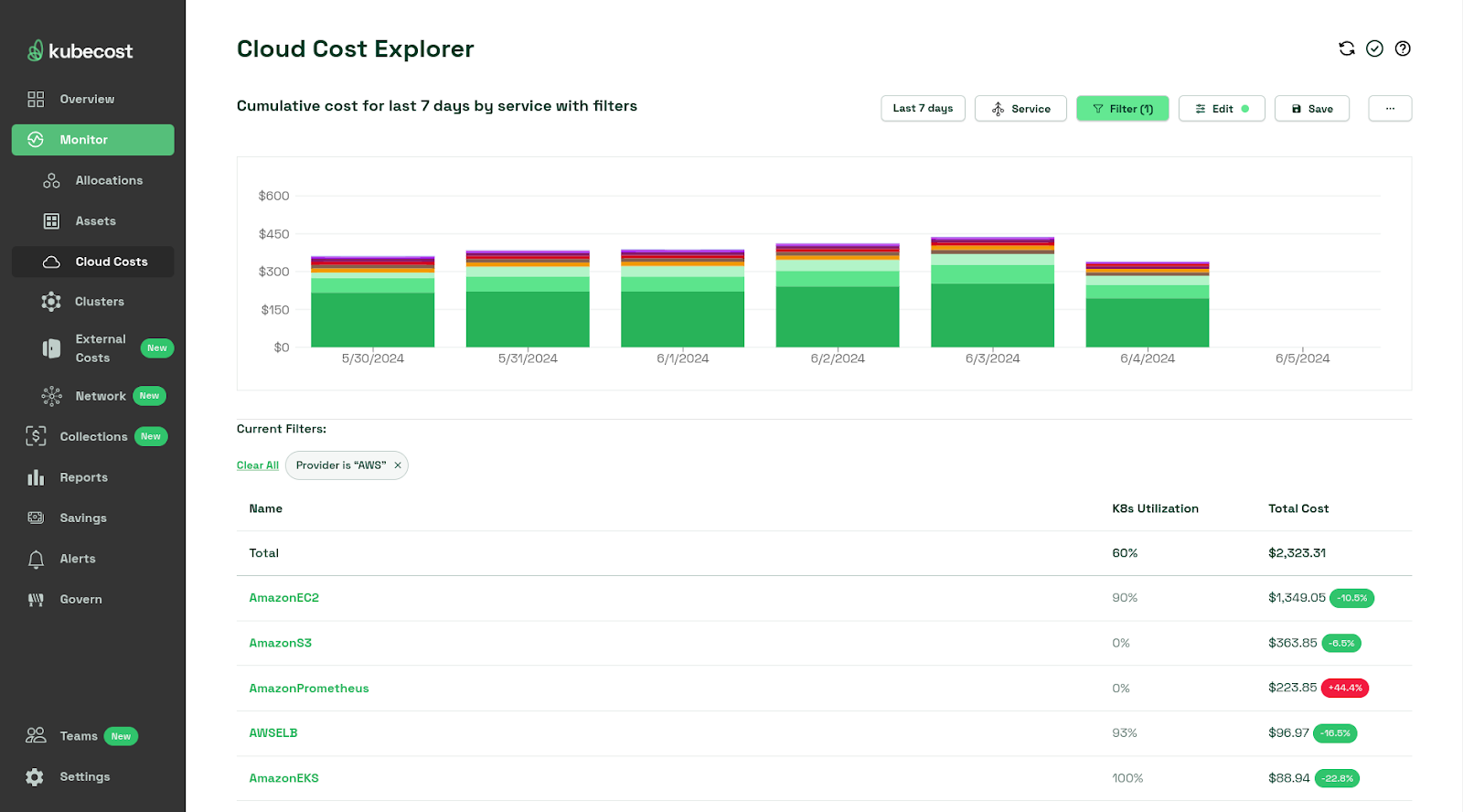

Mapping Kubernetes costs to business value requires looking beyond container expenses. When allocating costs to consumers, account for compute node costs, cluster operational costs, storage costs, licensing fees, observability tooling, and security services.

Management/cluster operational costs

Managing the cluster has its own cost: cloud service providers may charge a fee for a managed control plane, and a self-managed control plane runs on container orchestrator nodes that you pay for. Edge services such as web application firewalls and load balancers can add further expenses.

Storage costs

Containers consume storage. Even when container storage is temporary, the storage for the nodes' host operating systems and the backup storage needed to operate a production cluster should be allocated to workloads.

Licensing

Consider licensing costs for the host operating system and any software packages running on the host OS. Licensing fees may also apply to software used within containers.

Observability

Metrics and logs sent from the cluster to services like Splunk Cloud or Datadog incur costs. These costs should be allocated to teams utilizing the observability tools.

Security

Cloud service providers offer security-related services, which may come with additional costs. These expenses should be allocated to teams benefiting from enhanced security features.
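One way to apply the categories above is driver-based allocation: each shared cost is split among teams by the metric that best reflects who consumes it, for example observability spend by log volume ingested. The sketch below assumes hypothetical monthly amounts, team names, and driver choices; pick drivers that match your own billing data.

```python
# Hypothetical monthly shared costs (USD) for the categories above.
SHARED_COSTS = {
    "management": 73.0,      # e.g. a managed control-plane fee
    "storage": 400.0,        # host OS disks and backups
    "licensing": 250.0,
    "observability": 900.0,
    "security": 300.0,
}

# Hypothetical allocation drivers: the per-team metric each category is split by.
DRIVERS = {
    "management": "compute_cost",
    "storage": "storage_gib",
    "licensing": "node_hours",
    "observability": "ingested_gb",
    "security": "compute_cost",
}

# Hypothetical per-team metrics for the month.
TEAM_METRICS = {
    "payments": {"compute_cost": 1200.0, "storage_gib": 500, "node_hours": 1440, "ingested_gb": 300},
    "search": {"compute_cost": 800.0, "storage_gib": 300, "node_hours": 720, "ingested_gb": 600},
    "web": {"compute_cost": 400.0, "storage_gib": 200, "node_hours": 720, "ingested_gb": 100},
}


def allocate_shared(shared: dict, drivers: dict, metrics: dict) -> dict[str, float]:
    """Split every shared category across teams in proportion to its driver."""
    allocated = {team: 0.0 for team in metrics}
    for category, amount in shared.items():
        driver = drivers[category]
        total = sum(m[driver] for m in metrics.values())
        for team, m in metrics.items():
            allocated[team] += amount * m[driver] / total
    return allocated


overhead = allocate_shared(SHARED_COSTS, DRIVERS, TEAM_METRICS)
# Fully loaded cost = direct container compute + allocated overhead.
fully_loaded = {t: TEAM_METRICS[t]["compute_cost"] + overhead[t] for t in TEAM_METRICS}
for team, cost in fully_loaded.items():
    print(f"{team}: ${cost:,.2f}")
```

Keeping the driver table separate from the amounts makes it easy to change how one category is split without touching the rest.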

Addressing the costs associated with containerization involves understanding both static and runtime expenses. Here’s a breakdown of these costs:

Static costs

- Solution creation: When building a containerized solution, ensure its quality and assess how it will affect CPU, network, and storage consumption once deployed.

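- Stateless and stateful containers: Static costs differ depending on whether a container is stateless or stateful. For example, a stateful container needs persistent volumes, which are billed for as long as they are provisioned.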

Runtime costs

- Bandwidth: Often underestimated, bandwidth, especially data transferred out of the cloud or between availability zones and regions, can significantly affect cloud charges.

- Forgotten deployments: A containerized application left deployed can lead to unexpected bills. It's crucial to remove applications or data from the cloud when they’re no longer needed.

- Polling data: Polling cloud services can be expensive because each request may incur a transaction fee, so costs grow with polling frequency.

- Unintended traffic: DoS attacks or web crawlers can unexpectedly increase traffic. Implementing security audits and controls, like CAPTCHAs, can mitigate these costs.

- Monitoring: Regularly monitor application health and billing, review which cloud resources are still needed, and adjust deployments to match the load.
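The effect of polling frequency on per-request fees is simple arithmetic. The sketch below assumes a 30-day month, a fixed number of clients polling on a fixed interval, and a hypothetical price per million requests; substitute your provider's actual rate.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # a 30-day month: 2,592,000 seconds


def monthly_polling_cost(interval_s: float, pollers: int, price_per_million: float) -> float:
    """Estimate one month of per-request fees for clients polling on a fixed interval."""
    requests = pollers * SECONDS_PER_MONTH / interval_s
    return requests / 1_000_000 * price_per_million


# Hypothetical scenario: 50 pollers at a hypothetical $0.40 per million requests.
for interval in (1, 10, 60):
    print(f"every {interval:>2}s: ${monthly_polling_cost(interval, 50, 0.40):,.2f}/month")
```

Because cost is inversely proportional to the interval, polling every second costs 60 times as much as polling once a minute; switching to event notifications, where available, removes most of these requests.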

Allocating shared expenses

- Networking and storage: Assign these costs to specific projects, teams, namespaces, and applications.

- Autoscaled multi-tenant services: For architectures with autoscaled pods supporting multi-tenant services, map Kubernetes units like pods, deployments, or namespaces to an economic unit for cost calculation.
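For autoscaled services, a snapshot of replica counts is misleading, so one approach is to sample replicas over time, convert the samples to pod-hours per namespace, map each namespace to a tenant, and divide the resulting cost by a business volume to get a cost per economic unit. Everything below is a hypothetical sketch: the 15-minute sampling interval, the namespace-to-tenant mapping, the blended cost per pod-hour, and the request counts are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical replica samples taken every 15 minutes: (namespace, replica count).
# Autoscaling changes the replica count between samples.
SAMPLE_INTERVAL_H = 0.25
SAMPLES = [
    ("checkout", 2), ("search", 4),
    ("checkout", 6), ("search", 4),
    ("checkout", 3), ("search", 8),
    ("checkout", 1), ("search", 2),
]

NAMESPACE_TO_TENANT = {"checkout": "account134", "search": "account200"}  # hypothetical
COST_PER_POD_HOUR = 0.05  # hypothetical blended rate for one pod's requested resources
REQUESTS_SERVED = {"account134": 120_000, "account200": 400_000}  # hypothetical volume


def pod_hours_by_tenant(samples: list[tuple[str, int]]) -> dict[str, float]:
    """Integrate sampled replica counts into pod-hours per tenant."""
    hours: dict[str, float] = defaultdict(float)
    for namespace, replicas in samples:
        hours[NAMESPACE_TO_TENANT[namespace]] += replicas * SAMPLE_INTERVAL_H
    return dict(hours)


def cost_per_thousand(tenant: str, pod_hours: float) -> float:
    """Cost of serving 1,000 requests, the economic unit assumed here."""
    return pod_hours * COST_PER_POD_HOUR / REQUESTS_SERVED[tenant] * 1000


for tenant, h in pod_hours_by_tenant(SAMPLES).items():
    print(f"{tenant}: {h:.2f} pod-hours, ${cost_per_thousand(tenant, h):.5f} per 1,000 requests")
```

Shorter sampling intervals track rapid scale-ups more accurately at the cost of more metric data to store.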

By establishing methods to allocate costs, FinOps teams can focus on the costs associated with single tenants, teams, or applications alongside other static and runtime costs, thus aligning Kubernetes expenses with actual business value. This approach ensures a comprehensive understanding of where resources are consumed and provides a basis for optimizing cloud expenditures.