Kubernetes offers considerable performance and flexibility, but these tend to go hand in hand with higher costs. It is commonly believed that containers bring savings compared to the traditional approach of virtual machines, but in practice, that is rarely the case. Keeping costs under control requires a conscious effort to optimize both the underlying infrastructure and the applications hosted on the clusters.

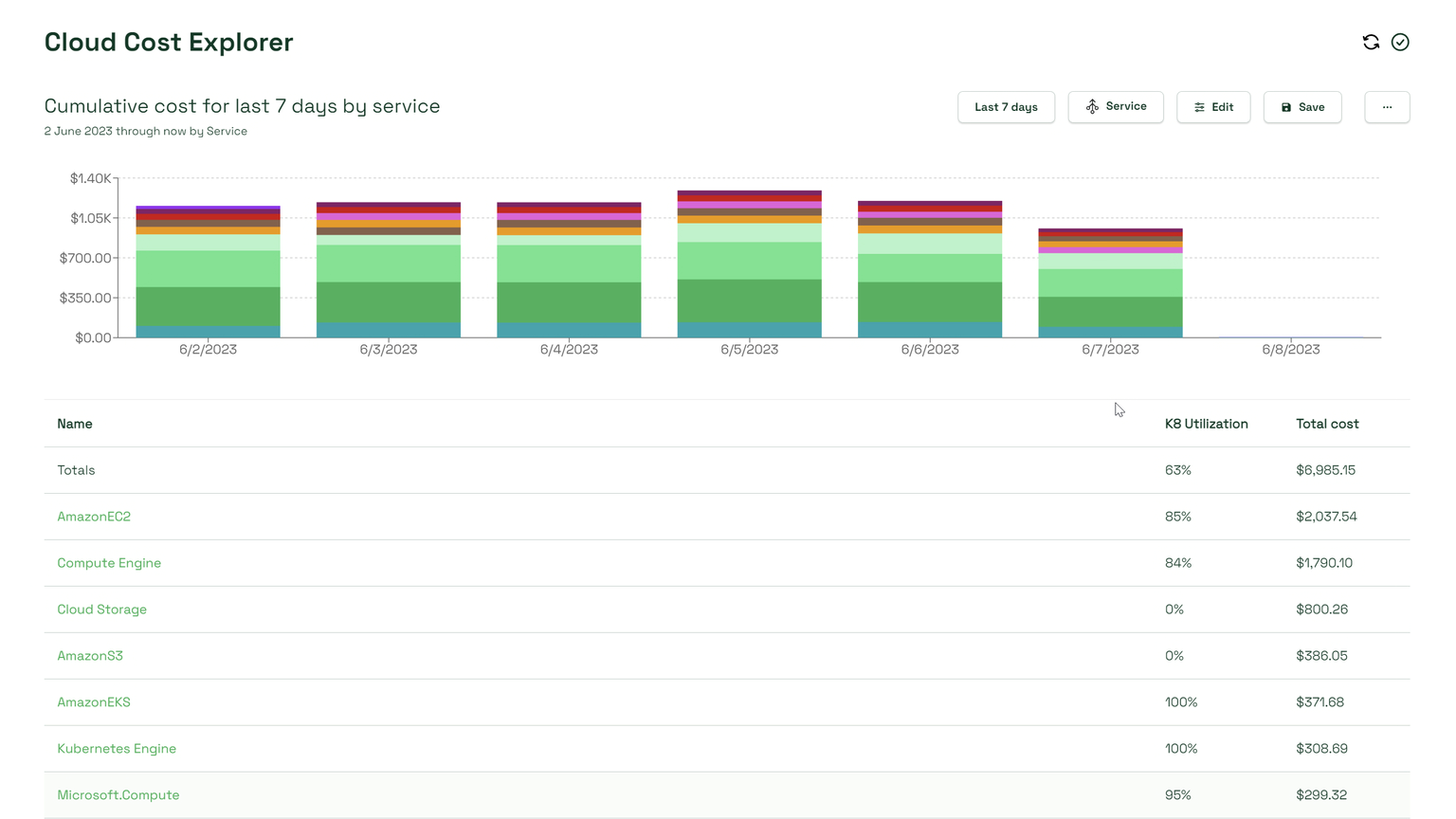

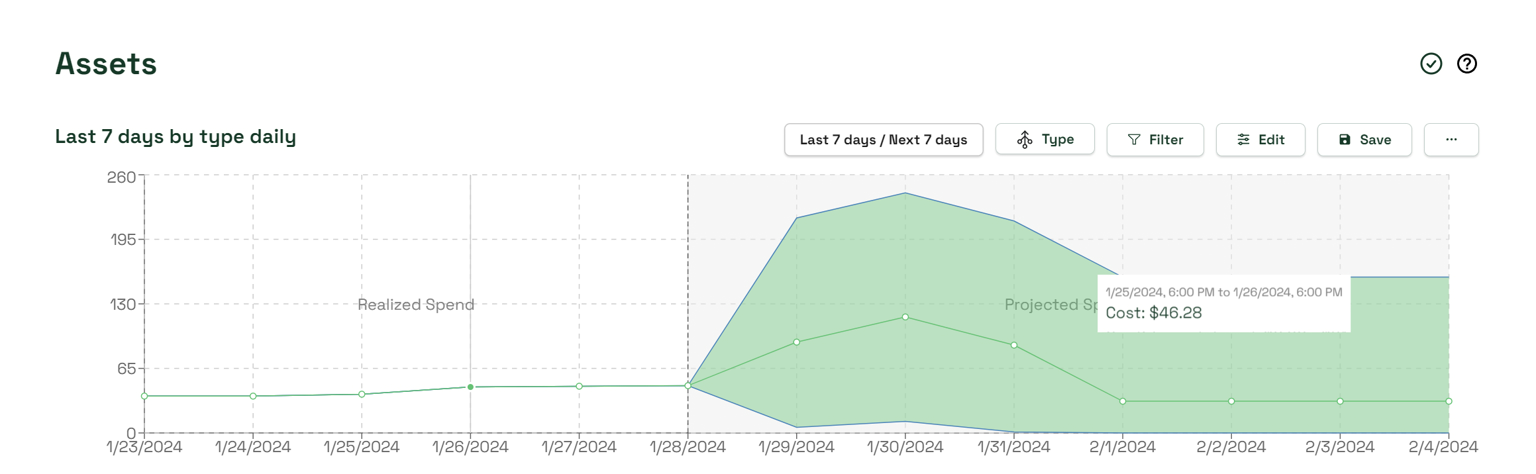

With limited insight into utilization patterns at the time of resource provisioning, application owners tend to overallocate resources. That is not an inherently wrong approach, but allocations should be reviewed regularly and tightened once more utilization data is available. A reliable monitoring solution, such as Prometheus, and tools to analyze utilization patterns, such as Kubecost, make the entire process much more accessible.

Optimization can take many forms. Monitoring and reviewing historical utilization data makes it possible to determine accurate CPU and memory allocations for Kubernetes workloads and to maintain a balance between guaranteed resources (resource requests) and maximum usage (resource limits). This exercise frequently leads to eliminating orphaned resources or changing the configuration of the underlying infrastructure. Determining key metrics and performance bottlenecks (CPU, memory, GPU, or other resources) is essential for establishing optimal node configurations. Cloud providers offer a wide range of VMs, each with different specifications and advantages, which must be considered when optimizing Kubernetes clusters.
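As a rough illustration of turning historical usage into requests and limits, the sketch below derives a CPU request from a high percentile of observed usage and a limit from the observed peak plus some headroom. The function names, the 90th-percentile choice, and the 20% headroom are assumptions made for this example, not recommendations from Kubernetes or Kubecost, and the sample values are invented.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def suggest_cpu(samples_millicores, request_pct=90, headroom_pct=20):
    """Suggest a CPU request and limit (in millicores) from usage samples.

    Assumptions for illustration only: the request covers `request_pct`
    percent of observed samples, and the limit is the observed peak plus
    `headroom_pct` percent.
    """
    request = percentile(samples_millicores, request_pct)
    peak = max(samples_millicores)
    limit = math.ceil(peak * (100 + headroom_pct) / 100)
    # A limit below the request would be rejected by Kubernetes.
    return {"request_m": request, "limit_m": max(limit, request)}

# Hypothetical CPU usage samples for one container, in millicores.
usage = [120, 150, 130, 400, 140, 160, 135, 155, 145, 150]
print(suggest_cpu(usage))  # {'request_m': 160, 'limit_m': 480}
```

In practice, the samples would come from a monitoring system such as Prometheus, and memory would need the same treatment with more conservative headroom, since exceeding a memory limit terminates the container rather than throttling it.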

Modern architectures on cloud providers make resource optimization more accessible. Autoscaling can provide additional resources on demand. A serverless approach abstracts the concept of virtual machines completely and allows administrators to focus on Kubernetes management. Cloud-managed Kubernetes services reduce the overhead of dealing with the Kubernetes control plane. Native integration with monitoring and logging services increases visibility without added complexity.

A series of articles on Kubernetes autoscaling expands on this topic: https://www.kubecost.com/kubernetes-autoscaling/

Here are some components of a resource optimization strategy that are worth keeping in mind when working with Kubernetes clusters:

- Monitor and adjust CPU and memory requests and limits to align with usage.

- Analyze historical usage data to set optimal resource allocations and avoid overprovisioning.

- Use the Kubernetes Cluster Autoscaler (or alternative solutions, such as Karpenter) to adjust the number of nodes based on cluster load, adding nodes when needed and removing them when underutilized.

- Consolidate workloads onto fewer nodes during low-demand periods to maximize resource utilization.

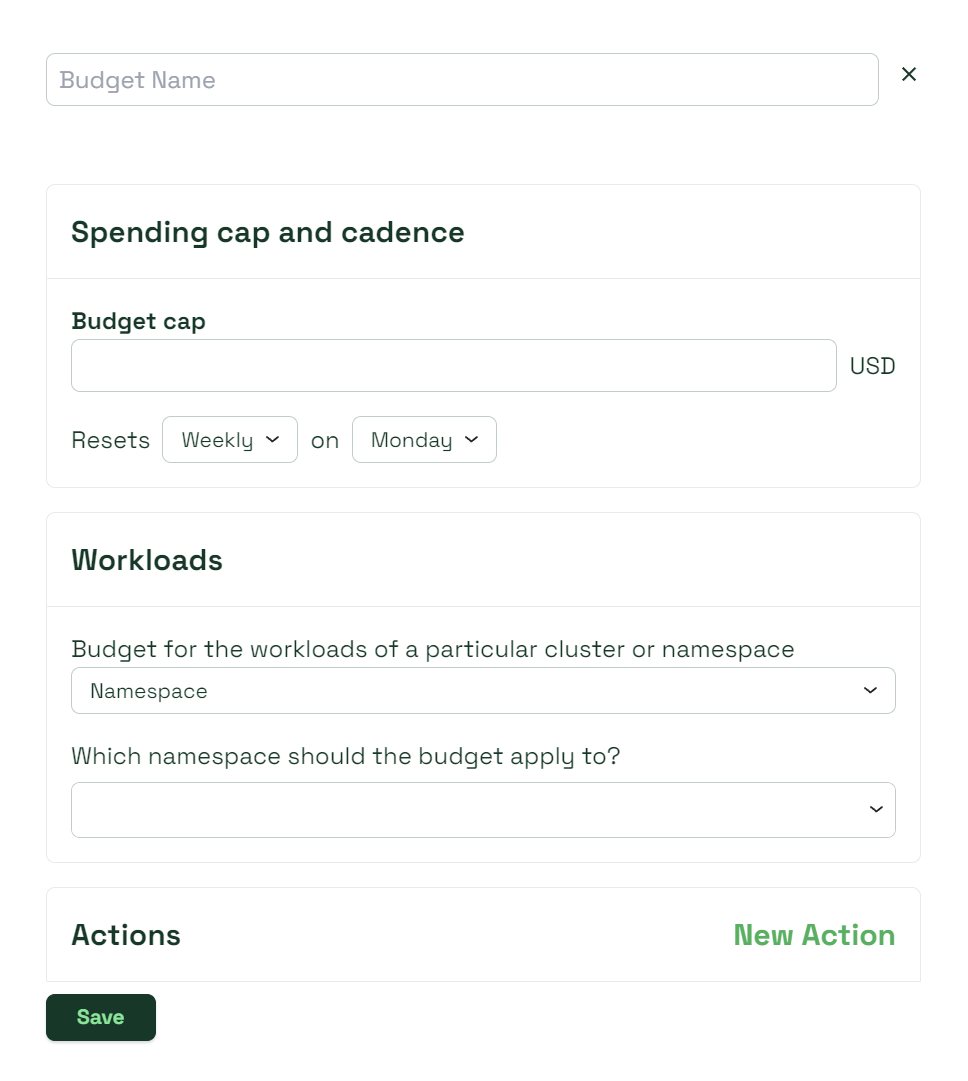

- Set resource quotas and limits for namespaces to control and manage resource consumption effectively.

- Implement the Horizontal Pod Autoscaler (HPA) to automatically scale the number of pods based on workload demands.

- Use the Vertical Pod Autoscaler (VPA) to adjust pod resource requests and limits. Applying the new settings typically requires the affected pods to be restarted.
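To make the consolidation idea from the list above more concrete, here is a minimal sketch that packs pod CPU requests onto as few equally sized nodes as a first-fit-decreasing heuristic can find. It is not how the Cluster Autoscaler or Karpenter work internally; the function name, the single-resource model, and the numbers are assumptions for illustration. Real schedulers also account for memory, affinity rules, and disruption budgets.

```python
def consolidate(pod_requests_m, node_capacity_m):
    """Pack pod CPU requests (millicores) onto nodes using first-fit decreasing.

    Returns a list of nodes, each represented as the list of pod requests
    placed on it. Only CPU is modeled, which is a simplification.
    """
    nodes = []
    for req in sorted(pod_requests_m, reverse=True):
        if req > node_capacity_m:
            raise ValueError(f"pod requesting {req}m does not fit on any node")
        for node in nodes:
            if sum(node) + req <= node_capacity_m:
                node.append(req)  # fits on an existing node
                break
        else:
            nodes.append([req])  # no existing node had room; open a new one
    return nodes

# Hypothetical pod requests and node size, in millicores.
pods = [500, 1200, 300, 800, 700, 400, 100]
placement = consolidate(pods, node_capacity_m=2000)
print(len(placement), placement)
# 2 [[1200, 800], [700, 500, 400, 300, 100]]
```

Comparing the node count from such an estimate with the number of nodes currently running gives a rough sense of how much capacity sits idle during low-demand periods.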

You can learn more about implementing the HPA and VPA in this article on the Kubernetes Metrics Server.
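For intuition about how the HPA arrives at a replica count, the sketch below applies the scaling rule described in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up, with no change when the ratio is within a tolerance (10% by default). The function and parameter names are made up for this example, and the real controller handles further cases, such as unready pods and stabilization windows, that are omitted here.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA rule: ceil(current_replicas * current / target)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target; do not scale
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))

# Hypothetical average CPU utilization (%) against a 60% target.
print(desired_replicas(4, 90, 60))   # 6: scale out
print(desired_replicas(4, 63, 60))   # 4: within tolerance
print(desired_replicas(4, 15, 60))   # 1: scale in
```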

External tools make the optimization process much more straightforward. If Kubernetes clusters are hosted on cloud providers such as AWS, some services are available out of the box. AWS users can use AWS Compute Optimizer and AWS Trusted Advisor to gain insights into potential resource right-sizing.

Third-party tools can serve a similar purpose through a much more granular and user-friendly approach. They excel at analyzing cloud metrics and providing accurate optimization recommendations. Such optimization is based on cloud metrics, though, so it may not be sufficient for Kubernetes clusters. Adding Kubecost provides additional insights into Kubernetes resources and results in a robust stack for optimizing a Kubernetes-based infrastructure.

The general rule of effective optimization is that it should lead to reduced costs and improved performance rather than sacrificing one for the other.