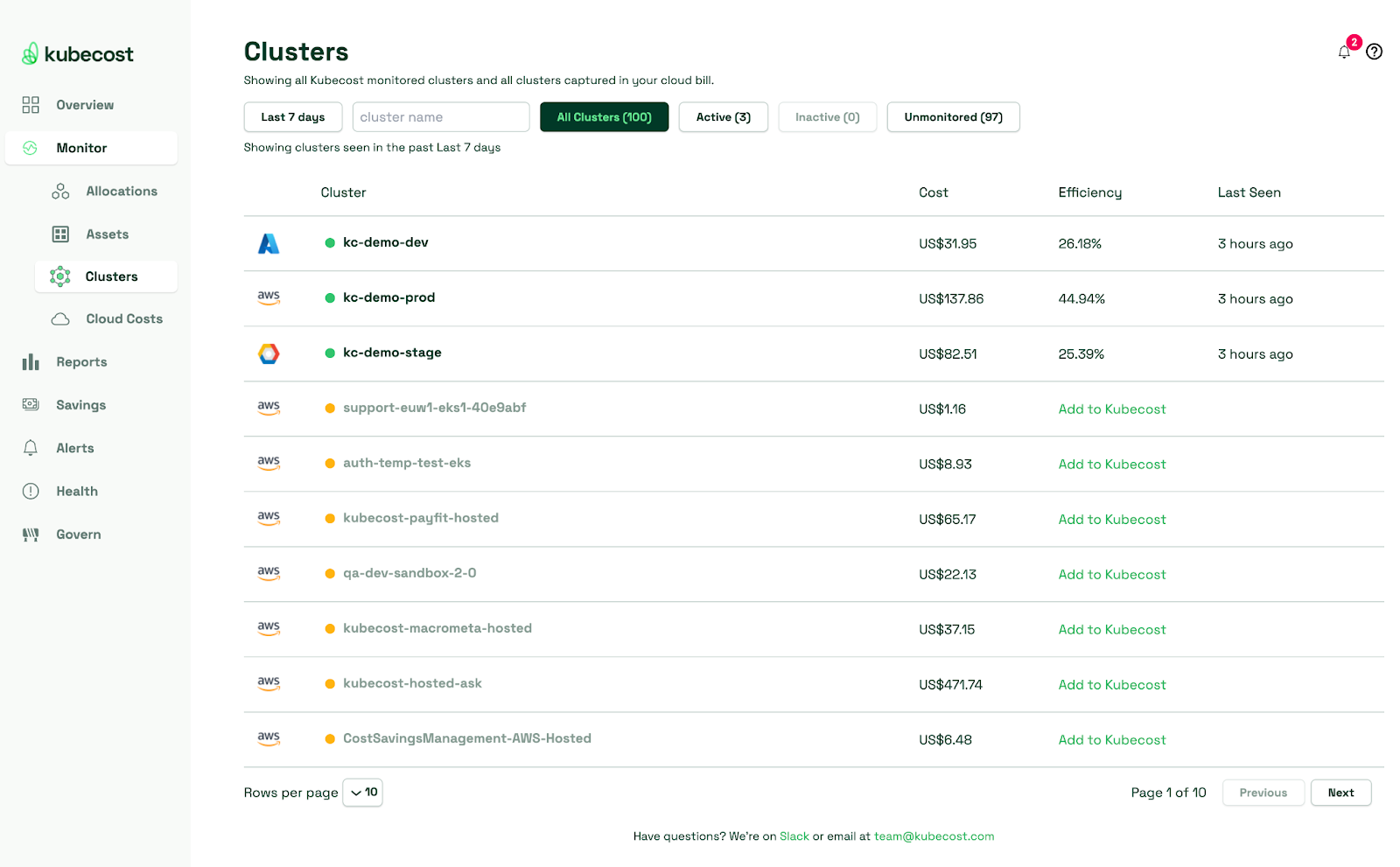

Costs can be kept down by not immediately ingesting any metrics that become available for ingestion from the Kubernetes cluster; instead, as described in the previous section, build incrementally and scrape metrics as needed. In addition, costs can also be monitored in detail by using managed platforms like kubecost that use Prometheus behind the scenes to scrape for Kubernetes metrics(i.e., “node_total_hourly_cost”) and illustrate cost graphs in multiple breakdowns that allow for enhanced visibility.

Set up alert rules

You can set up alerts for any metric Prometheus collects. To do this, create a YAML file, such as alert_rules.yaml, and populate it with your alert rules. Next, include this file in the rule_files section of your primary prometheus.yml configuration file, which will integrate it into Prometheus for monitoring, for example:

rule_files:

- alert_rules.yaml

Alerts must be clearly defined, and setting up such alerts must be done with suitable thresholds. As a best practice, define alerts that pinpoint potential issues by establishing thresholds for metrics like CPU, memory, or network error rates. Some example alerts could be the following:

- Alert when RAM goes beyond a specified limit for an individual pod, node, or for the Kubernetes Cluster as a whole

- Alert on individual Kubernetes resources, such as when there is latency in the Kubernetes API itself

- Configure alerting on individual jobs in the cluster in case they fail or if they have been running longer than expected.

- An alert could be created to trigger when a pod’s, node’s or the cluster’s CPU usage surpasses a certain percentage. An example configuration of

alert_rules.yaml containing such an alert which is triggered when the CPU goes over a threshold in a pod, can be seen here:

groups:

- name: kubernetes-alerts

rules:

- alert: ClusterCPULoadHigh

expr: sum(kube_pod_container_resource_limits{resource="cpu"}) - sum(kube_node_status_capacity_cpu_cores) > 0

for: 5m

labels:

severity: warning

annotations:

summary: "High CPU load across the cluster"

description: "CPU utilization in the cluster exceeds available capacity for the last 5 minutes."

In this example, an alert named “ClusterCPULoadHigh'' is defined, which is only triggered if the “expr” PromQL query holds true for the duration of the “for” value, which is five minutes. The “expr” query will calculate the total number of CPU limits across all pods in the cluster, and compare it with the sum of CPU limits across all nodes in the cluster. If the result is positive, the alert will trigger, indicating high CPU utilization in the cluster. A very good starting point on what metrics are available from Kubernetes can be seen in the official documentation and the Kubecost metrics page.

All these metrics can be configured depending on the needs of each use case. By establishing these straightforward alerts and thresholds, Prometheus will actively monitor your Kubernetes system and apps and promptly notify you of deviations, ultimately helping prevent potential performance issues and enabling timely actions from platform teams. These actions will help maintain a healthy Kubernetes environment.

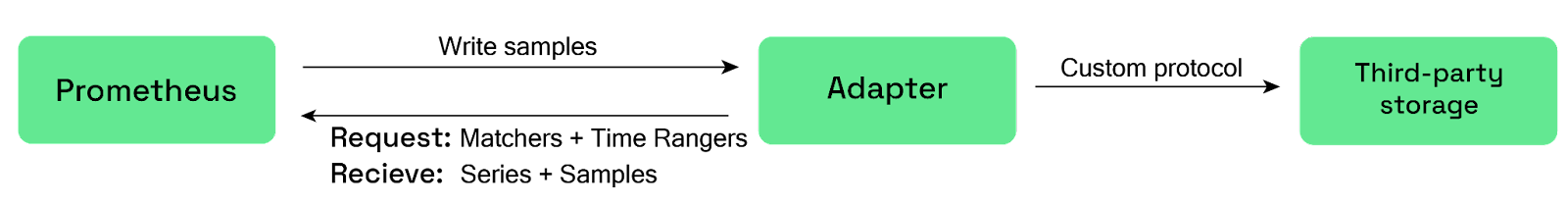

Implement proper data retention

The storage system in Prometheus is a time series database(TSDB) designed specifically for handling and storing time-series data collected from monitored systems and applications.